Contents

- Intro

- RStudio

- Basic principles of

Rprogramming - Putting it all together

- Functions

- Making the computer do the heavy lifting

- Reshaping Data

- Data visualisation

- Useful tips

- Jargon dictionary

Intro

The first contact with R might feel a little overwhelming, especially if you haven’t done any programming before.

Some things are fairly easy to understand but others do not come naturally to people.

To help you with the latter group, here are some tips on how to approach talking to computers.

Jargon warning

It is unavoidable to include some technical language. At first, this may feel a little obscure but the vocab needed to understand this document is not large. Besides, learning the jargon will make it possible for you to put into words what it is you need to do and, if you’re struggling, ask for help in places such as Stack Overflow.

If you come across an unfamiliar term, feel free to consult the dictionary at the bottom of this document.

We’re smarter than them (for now)

Talking to a computer is can sometimes feel a little difficult. They are highly proficient at formal languages: they understands the vocab, grammar, and syntax perfectly well. They can do many complex things you ask them to do, so long as you are formal, logical, precise, and unambiguous. If you are not, they won’t be able to help you or worse, will do something wrong. When it comes to pragmatics, they are lost: they doesn’t understand context, metaphor, shorthand, or sarcasm. The truth is that they just aren’t all that smart so they need to be told what to do in a unambiguous way.

Keep this in mind whenever you’re working with R.

Don’t take anything for granted, don’t rely on R to make any assumptions about what you are telling it.

Certainly don’t try to be funny and expect R to appreciate it!

It takes a little practice to get into the habit of translating what you want to do into the dry, formal language of logic and algorithms but, on the flip side, once you get the knack of it, there will never be any misunderstanding on the part of the machine.

With people, there will always be miscommunication, no matter how clear you try to be, because people are very sensitive to context, paralinguistic and non-verbal cues, and the pragmatics of a language.

Computers don’t care about any of that and thus, if they’re not doing what you want them to, the problem is in the sender, not the receiver.1 That is exactly why they’re built like that!

Instead of thinking about computers as scary, abstruse, or boring, learn to appreciate this quality of precision as beautiful. That way, you’ll get much more enjoyment out of working with them.

Think algorithmically

By algorithmically, we mean in a precise step-by-step fashion where each action is equivalent to the smallest possible increment.

So, if you want a machine to make you a cup of tea, instead of saying: “Oi R! Fetch me a cuppa!”, you need to break everything down.

Something like this:

Go to kitchenIf no kitchen, jump to 53Else get kettleIf no kettle, jump to 53Check how much water in kettleIf is more or equal than 500 ml of water in kettle, jump to 15Place kettle under cold tapOpen kettle lidTurn cold tap onCheck how much water in kettleIf there is more than 500 ml of water in kettle, jump to 13Jump to 10Turn cold tap offClose kettle lidIf kettle is on base, jump to 17Place kettle on baseIf base is plugged in mains, jump to 19Plug base in mainsTurn kettle onGet cupIf no cup, jump to 53Get tea bag# yeah, we’re not poshIf no tea bag, jump to 53Put tea bag in cupIf kettle has boiled, jump to 27Jump to 25Start pouring water from kettle to cupCheck how much water in cupIf there is more than 225 ml of water in cup, jump to 31Jump to 28Stop pouring water from kettleReturn kettle on baseWait 4 minutesRemove tea bag from cupPlace tea bag by edge of sink# this is obviously your partner’s/flatmate’s programOpen fridgeSearch fridgeIf full fat milk is not in fridge, jump to 41Get full fat milk# full fat should be any sensible person’s top choice. True fact!Jump to 43If semi-skimmed milk is not in fridge, jump to 51# skimmed is not even an option!Get semi-skimmed milkTake lid of milkStart pouring milk in cupCheck how much liquid in cupIf more or equal to 250 ml liquid in cup, jump to 48Jump to 45Stop pouring milkPut lid on milkReturn milk to fridgeClose fridge# yes, we kept it open! Fewer steps (I’m not lazy, I’m optimised!)Bring cup# no need to stirEND

so there you go, a nice cuppa in 53 easy steps!

Obviously, this is not real code but something called pseudocode: a program-like set of instructions expressed in a more-or-less natural language. Surely, we could get even more detailed, e.g., by specifying what kind of tea we want, but 53 steps is plenty. Notice, however, that, just like in actual code, the entire process is expressed in a sequential manner. This sometimes means that you need to think about how to say things that would be very easy to communicate to a person (e.g., “Wait till the kettle boils”) in terms of well-defined repeatable steps. In our example, this is done with a loop:

If kettle has boiled, jump to 27Jump to 25Start pouring water from kettle to cup

The program will check if the kettle has boiled and, if it has, it will start pouring water into the cup. If it hasn’t boiled, it will jump back to 25. and check again if it has boiled. This is equivalent to saying “wait till the kettle boils”.

Note that in actual R code, certain expressions, such as jump to (or, more frequently in some older programming languages goto) are not used so you would have to find other ways around the problem, were you to write a tea-making program in R.

However, this example illustrates the sort of thinking mode you need to get into when talking to computers.

Especially when it comes to data processing, no matter what it is you want your computer to do, you need to be able to convey it in a sequence of simple steps.

Don’t be scared

It is quite normal to be a little over-cautious when you first start working with a programming language.

Rest assured that, when it comes to R, there is very little chance you’ll break your computer or damage your data, unless you set out to do so and know what you’re doing.

When you read in your data, R will copy it to the computer’s memory and only work with this copy.

The original data file will remain untouched.

So please, be adventurous, try things out, experiment, break thingst’s the best way to learn programming and understand the principles and the logic behind the code.

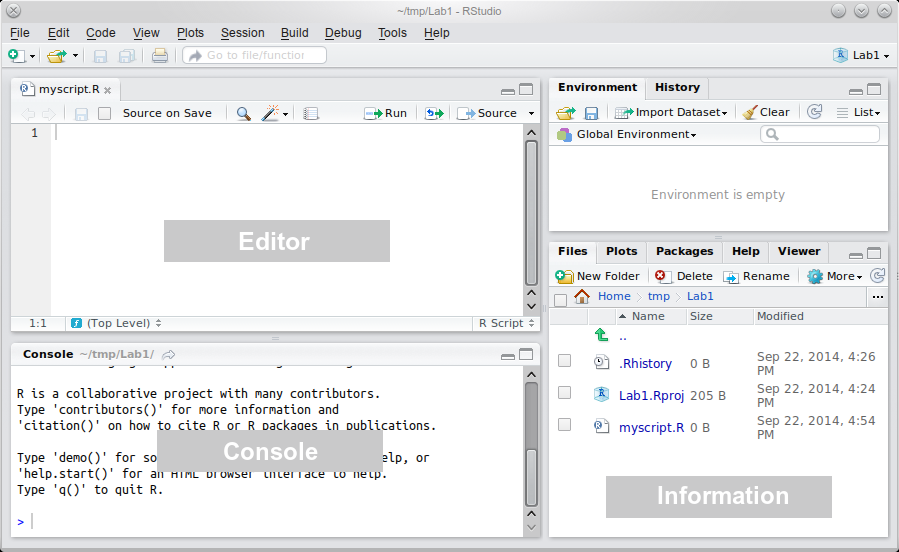

RStudio

RStudio is what is called an Integrated Development Environment for R.

It is dependent on R but separate from it.

There are several ways of using R but RStudio is arguably the most popular and convenient.

Let’s have a look at it.

The “heart of R” is the Console window.

This is where instructions are sent to R, and its responses are given.

The console is, almost exclusively, the way of talking to R in RStudio.

The Information area (all of the right-hand side of RStudio) shows you useful information about the state of your project.

At the moment, you can see some relevant files in the bottom pane, and an empty “Global Environment” at the top.

The global environment is a virtual storage of all objects you create in R.

So, for example, if you read in some data into R, this is where they will be put and where R will look for them if you tell it to manipulate or analyse the data.

Finally, the Editor is where you write more complicated scripts without having to run each command.

Each script, when saved, is just a text file with some added bells and whistles.

There’s nothing special about it.

Indeed, if you wanted to, you could write your script in any plain text editor, save it, change its extension from .txt to .R and open it in RStudio.

There is no need to do this but you could.

When you run such a script file, it gets interpreted by R in a line by line fashion.

This means that your data cleaning, processing, visualisation, and analysis needs to be written up in sequence otherwise R won’t be able to make sense of your script.

Since the script is basically a plain text file (with some nice colours added by RStudio to improve readability), the commands you type in can be edited, added, or deleted just like if you were writing an ordinary document.

You can run them again later, or build up complex commands and functions over several lines of text.

There is an important practical distinction between the Console and the Editor: In the Console, the Enter key runs the command. In the Editor, it just adds a new line. The purpose of this is to facilitate writing scripts without running each line of code. It also enables you to break down your commands over multiple lines so that you don’t end up with a line that’s hundreds of characters long. For example:

The hash (#) marks everything to the right of it as comment.

Comments are useful for annotating the code so that you can remember what it means when you return to your code months later (it will happen!).

It also improves code readability if you’re working on a script in collaboration with others.

Comments should be clear but also concise.

There is no point in paragraphs of verbose commentary.

Writing (and saving) scripts has just too many advantages over coding in the console to list and it it is crucial that you learn how to do it. It will enable you to write reproducible code you can rerun whenever needed, reuse chunks of code you created for a previous project in your analysis, and, when you realise you made a mistake somewhere (when, not if, because this too will happen!), you’ll be able to edit the code and recreate your analysis in a small fraction of the time it would take you to analyse your data anew without a script. This way, if you write a command and it doesn’t do exactly what you wanted to do, you can quickly tweak it in the editor and run it again. Also, if you accidentally modify and object and mess everything up, you can just re-run your entire script up to that point and pretend nothing ever happened. Or different still, let’s say you analysed your data and wrote your report and then you realised you made a mistake, for instance forgot to exclude data you should have excluded or excluded some you shouldn’t have. Without a “paper-trail” of your analysis, this is a very unpleasant (but, sadly, not unheard of) experience. But with a saved analysis script, you can just insert an additional piece of code in the file, re-run it and laugh it off. Or do the first two, and then take a long hard look at yourselfhatever the case, using the script editor is just very, very useful!

However, the aim is to keep the script tidy.

You don’t need to put every single line of code you ever run into it.

Sometimes, you just want to look at your data, using, for example View(df).

This kind of command really doesn’t need to be in your script.

As a general rule of thumb, use the editor for code that adds something of value to the sequence of the script (data cleaning, analysis, code generating plots, tables, etc.) and the console for one-off commands (when you want to just check something).

Here is an example of what a neat script looks like (part of the Reproducibility Project: Psychology analysis2). Compare it to your own scripts and try to find ways of improving your coding.

For useful “good practice” guidance on how to write legible code, see the Google style guide.

Basic principles of R programming

The devil’s in the detail

It takes a little practice to develop good coding habits.

As a result, you will likely get a lot of errors when you first try to do things in R.

That’s perfectly normal and the following the tips below will make it a lot better rather quickly.

Promise!

When you do encounter an error or when R does something other than what you wanted, it means that, somewhere along the way, there has been a mistake in at least one of the main components of the R language:

Vocabulary

Simply put, you used the wrong word and so R understood something other than what you intended.

Translated into a more programming language, you used the incorrect function to perform some operation or performed the right operation on the wrong input.

Maybe you wanted to calculate the median of a variable but instead, you calculated the mean.

Or maybe you calculated the median as you wanted but of the wrong variable.

Different still, you might have used the right function an the right object but R does not know the function because you haven’t made it accessible (more on this in the section on packages.

The former case is usually a matter of knowing the names of your functions, which comes with time.

The latter two are more of a matter of attention to detail.

Grammar

Grammatical mistakes basically consist of using the right vocabulary but using it wrong. This can be prevented by learning how to use the commands you want to use or at least knowing how to find out about them. For a more in-depth discussion, see the section on functions.

Syntax

The third pitfall consists in using the right words in the right way but stringing them together wrong.

Since programming languages are formal, things like order and placement of commas and brackets matter a great deal.

This is usually the source of most of the frustration caused by people’s early experience with R.

To avoid running into syntactic problems, try to always follow these principles:

- Every open bracket (

(,[,{) has to be closed at some point.()s are exclusively for functions.[]s are only for subsetting.{}s group together code we want to evaluate conditionally or iteratively or for writing your own functions.

- Commas are functional and have their place.

They are used to separate arguments in functions and dimensions in subsetting.

As such, they can only be used inside

()s and[]s. - White spaces are optional.

You are free (and indeed encouraged) to use them but, if you do, bear in mind they must not be inserted inside a name of a variable or function.

- For instance, there is a function called

as.numeric. There may not be a white space anywhere within this name.

- For instance, there is a function called

- Any unquoted (not surrounded by

's or"s) string of letters is interpreted as a name of some variable, dataset, or function. Conversely, any quoted string is interpreted literally as a meaningless string of characters.meanis a name of the function that computes the arithmetic mean (e.g.,mean(c(1, 3, 4, 8, 100))gives the mean of the numbers 1, 3, 4, 8, and 100) but"mean"is just a string of letters and performs no function inR.

Ris case sensitive –ais not the same asA.

Naturally, these guidelines won’t mean all that much to you if you are completely new to programming. That’s OK. Come back to them once you’ve finished reading this document. They will appear much more useful then.

If you want to keep it, put it in a box

Everything in life is merely transient; we ourselves are pretty ephemeral beings. (#sodeepbro)

However, R takes this quality and runs with it.

If you ask R to perform any operation, it will spew it out into the console and immediately forget it ever happened.

Let’s show you what that means:

# create an object (variable) a and assign it the value of 1

a <- 1

# increment a by 1

a + 1

[1] 2# OK, now see what the value of a is

a

[1] 1

So, R as if forgot we asked it to do a + 1 and didn’t change its value.

The only way to keep this new value is to put it in an object.

b <- a + 1

# now let's see

b

[1] 2

Think of objects as boxes.

The names of the objects are only labels.

Just like with boxes, it is convenient to label boxes in a way that is indicative of their contents, but the label itself does not determine the content.

Sure, you can create an R object called one and store the value of 2 in it, if you wish.

But you might want to think about whether or not it is a helpful name.

And what kind of person that makes you…

Objects can contain anything at all: values, vectors, matrices, data, graphs, tables, even code.

In fact, every time you call a function, e.g., mean(), you are running the code that’s inside the object mean with whatever values you pass to the arguments of the function.

Let’s demonstrate this last point:

# let's create a vector of numbers the mean of which we want to calculate

vec <- c(103, 1, 1, 6, 3, 43, 2, 23, 7, 1)

# see what's inside

vec

[1] 103 1 1 6 3 43 2 23 7 1# let's get the mean

# mean is the sum of all values divided by the number of values

sum(vec)/length(vec)

[1] 19# good, now let's create a function that calculates

# the mean of whatever we ask it to

function(x) {sum(x)/length(x)}

function(x) {sum(x)/length(x)}# but as we discussed above, R immediately forgot about the function

# so we need to store it in a box (object) to keep it for later!

calc.mean <- function(x) {sum(x)/length(x)}

# OK, all ready now

calc.mean(x = vec)

[1] 19# the code inside the object calc.mean is reusable

calc.mean(x = c(3, 5, 53, 111))

[1] 43# to show that calc.mean is just an object with some code in it,

# you can look inside, just like with any other object

calc.mean

function(x) {sum(x)/length(x)}

<bytecode: 0x0000000019992618>

Let this be your mantra: “If I want to keep it for later, I need to put it in an object so that is doesn’t go off.”

You can’t really change an object

Unlike in the physical world, objects in R cannot truly change.

The reason is that, sticking to our analogy, these objects are kind of like boxes.

You can put stuff in, take stuff out and that’s pretty much it.

However, unlike boxes, when you take stuff out of objects, you only take out a copy of its contents.

The original contents of the box remain intact.

Of course you can do whatever you want (within limits) to the stuff once you’ve taken it out of the box but you are only modifying the copy.

And unless you put that modified stuff into a box, R will forget about it as soon as it’s done with it.

Now, as you probably know, you can call the boxes whatever you want (again, within certain limits).

What might not have occurred to you though, is that you can call the new box the same as the old one.

When that happens, R basically takes the label off the old box, pastes it on the new one and burns the old box.

So even though some operations in R may look like they change objects, under the hood R copies their content, modifies it, stores the result in a different object puts the same label on it and discards the original object.

Understanding this mechanism will make things much easier!

Putting the above into practice, this is how you “change” an R object:

# put 1 into an object (box) called a

a <- 1

# copy the content of a, add 1 to it and store it in an object b

b <- a + 1

# copy what's inside b and put it in a new object called a

# discarding the old object a

a <- b

# now see what's inside of a

# (by copying its content and pasting it in the console)

a

[1] 2

Of course, you can just cut out the middleman (object b).

So to increment a by another 1, we can do:

a <- a + 1

a

[1] 3

It’s elementary, my dear Watson

When it comes to data, every vector, matrix, list, data frame - in other words, every structure - is composed of elements.

An element is a single number, boolean (TRUE/FALSE), or a character string (anything in “quotes”).

Elements come in several classes:

"numeric", as the name suggests, a numeric element is a single number: 1, 2, -725, 3.14159265, etc.. A numeric element is never in ‘single’ or “double” quotesumbers are cool because you can do a lot of maths (and stats!) with them."character", a string of characters, no matter how long. It can be a single letter,'g', but it can equally well be a sentence,“Elen síla lumenn’ omentielvo.”(if you want the string to contain any single quotes, use double quotes to surround the string with and vice versa). Notice that character strings inRare always in ‘single’ or “double” quotes. Conversely anything in quotes is a character string:It stands to reason that you can’t do any maths with cahracter strings, not even if it’s a number that’s inside the quotes!

"3" + "2"Error in "3" + "2": non-numeric argument to binary operator"logical", a logical element can take one of two values,TRUEorFALSE. Logicals are usually the output of logical operations (anything that can be phrased as a yes/no question, e.g., is x equal to y?). In formal logic,TRUEis represented as 1 andFALSEas 0. This is also the case inR:# recall that c() is used to bind elements into a vector # (that's just a fancy term for an ordered group of elements) class(c(TRUE, FALSE))[1] "logical"# we can force ('coerce', in R jargon) the vector to be numeric as.numeric(c(TRUE, FALSE))[1] 1 0This has interesting implications. First, is you have a logical vector of many

TRUEs andFALSEs, you can quickly count the number ofTRUEs by just taking the sum of the vector:# consider vector of 50 logicals x[1] FALSE FALSE TRUE TRUE FALSE FALSE TRUE FALSE FALSE TRUE TRUE [12] FALSE TRUE TRUE TRUE FALSE TRUE TRUE TRUE TRUE FALSE TRUE [23] TRUE TRUE FALSE FALSE FALSE TRUE TRUE TRUE TRUE TRUE FALSE [34] FALSE FALSE TRUE TRUE TRUE TRUE FALSE TRUE TRUE TRUE TRUE [45] FALSE TRUE TRUE TRUE TRUE FALSE# number of TRUEs sum(x)[1] 32[1] 18Second, you can perform all sorts of arithmetic operations on logicals:

# TRUE/FALSE can be shortened to T/F T + T[1] 2F - T[1] -1(T * T) + F[1] 1Third, you can coerce numeric elements to valid logicals:

# zero is FALSE as.logical(0)[1] FALSE# everything else is TRUE as.logical(c(-1, 1, 12, -231.3525))[1] TRUE TRUE TRUE TRUENow, you may wonder that use this can possible be? Well, this way you can perform basic logical operations, such as AND, OR, and XOR (see section “Handy functions that return logicals” below):

# x * y is equivalent to x AND y as.logical(T * T)[1] TRUEas.logical(T * F)[1] FALSEas.logical(F * T)[1] FALSEas.logical(F * F)[1] FALSE# x + y is equivalent to x OR y as.logical(T + T)[1] TRUEas.logical(T + F)[1] TRUEas.logical(F + T)[1] TRUEas.logical(F + F)[1] FALSE# x - y is equivalent to x XOR y (eXclusive OR, either-or) as.logical(T - T)[1] FALSEas.logical(T - F)[1] TRUEas.logical(F - T)[1] TRUEas.logical(F - F)[1] FALSE"factor", factors are a bit weird. They are used mainly for tellingRthat a vector represents a categorical variable. For instance, you can be comparing two groups, treatment and control.# create a vector of 15 "control"s and 15 "treatment"s # rep stands for 'repeat', which is exactly what the function does x <- rep(c("control", "treatment"), each = 15) x[1] "control" "control" "control" "control" "control" [6] "control" "control" "control" "control" "control" [11] "control" "control" "control" "control" "control" [16] "treatment" "treatment" "treatment" "treatment" "treatment" [21] "treatment" "treatment" "treatment" "treatment" "treatment" [26] "treatment" "treatment" "treatment" "treatment" "treatment"# turn x into a factor x <- as.factor(x) x[1] control control control control control control [7] control control control control control control [13] control control control treatment treatment treatment [19] treatment treatment treatment treatment treatment treatment [25] treatment treatment treatment treatment treatment treatment Levels: control treatmentThe first thing to notice is the line under the last printout that says “

Levels: control treatment”. This informs you thatxis now a factor with two levels (or, a categorical variable with two categories).Second thing you should take note of is that the words

controlandtreatmentdon’t have quotes around them. This is another wayRuses to tell you this is a factor.With factors, it is important to understand how they are represented in

R. Despite, what they look like, under the hood, they are numbers. A one-level factor is a vector of1s, a two-level factor is a vector of1s and2s, a n-level factor is a vector of1s,2s,3s … ns. The levels, in our casecontrolandtreatment, are just labels attached to the1s and2s. Let’s demonstrate this:typeof(x)[1] "integer"# integer is fancy for "whole number" # we can coerce factors to numeric, thus stripping the labels as.numeric(x)[1] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2# see the labels levels(x)[1] "control" "treatment"The labels attached to the numbers in a factor can be whatever. Let’s say that in your raw data file, treatment group is coded as 1 and control group is coded as 0.

Since

xis now a factor with levels0and1, we know that it is stored inRas a vector of1s and2s and the zeros and ones, representing the groups, are only labels:The fact that factors in

Rare represented as labelled integers has interesting implications some of you have already come across. First, certain functions will coerce factors into numeric vectors which can shake things up. This happened when you usedcbind()on a factor with levels0and1:x[1] 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 Levels: 0 1# let's bind the first 15 elements and the last 15 elements together as columnscbind(x[1:15], x[16:30])[,1] [,2] [1,] 1 2 [2,] 1 2 [3,] 1 2 [4,] 1 2 [5,] 1 2 [ reached getOption("max.print") -- omitted 10 rows ]# printout truncated to first 5 rows to save spacecbind()binds the vectors you provide into the columns of a matrix. Since matrices (yep, that’s the plural of ‘matrix’; also, more on matrices later) can only containlogical,numeric, andcharacterelements, thecbind()function coerces the elements of thexfactor (haha, the X-factor) intonumeric, stripping the labels and leaving only1s and2s.The other two consequences of this labelled numbers system stem from the way the labels are stored. Every

Robject comes with a list of so called attributes attached to it. These are basically information about the object. For objects of classfactor, the attributes include its levels (or the labels attached to the numbers) and class:attributes(x)$levels [1] "0" "1" $class [1] "factor"So the labels are stored separately of the actual elements. This means, that even if you delete some of the numbers, the labels stay the same. Let’s demonstrate this implication on the

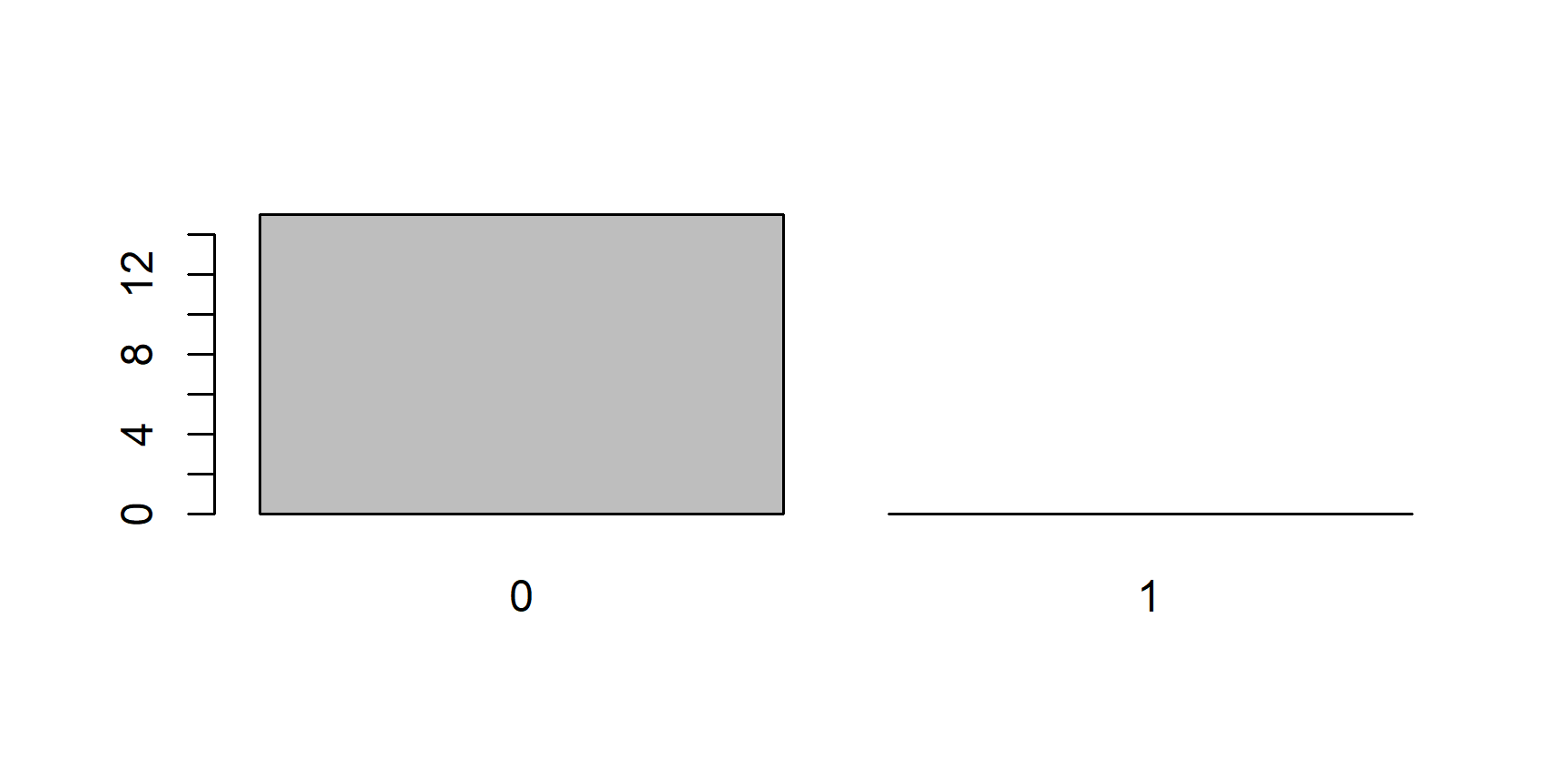

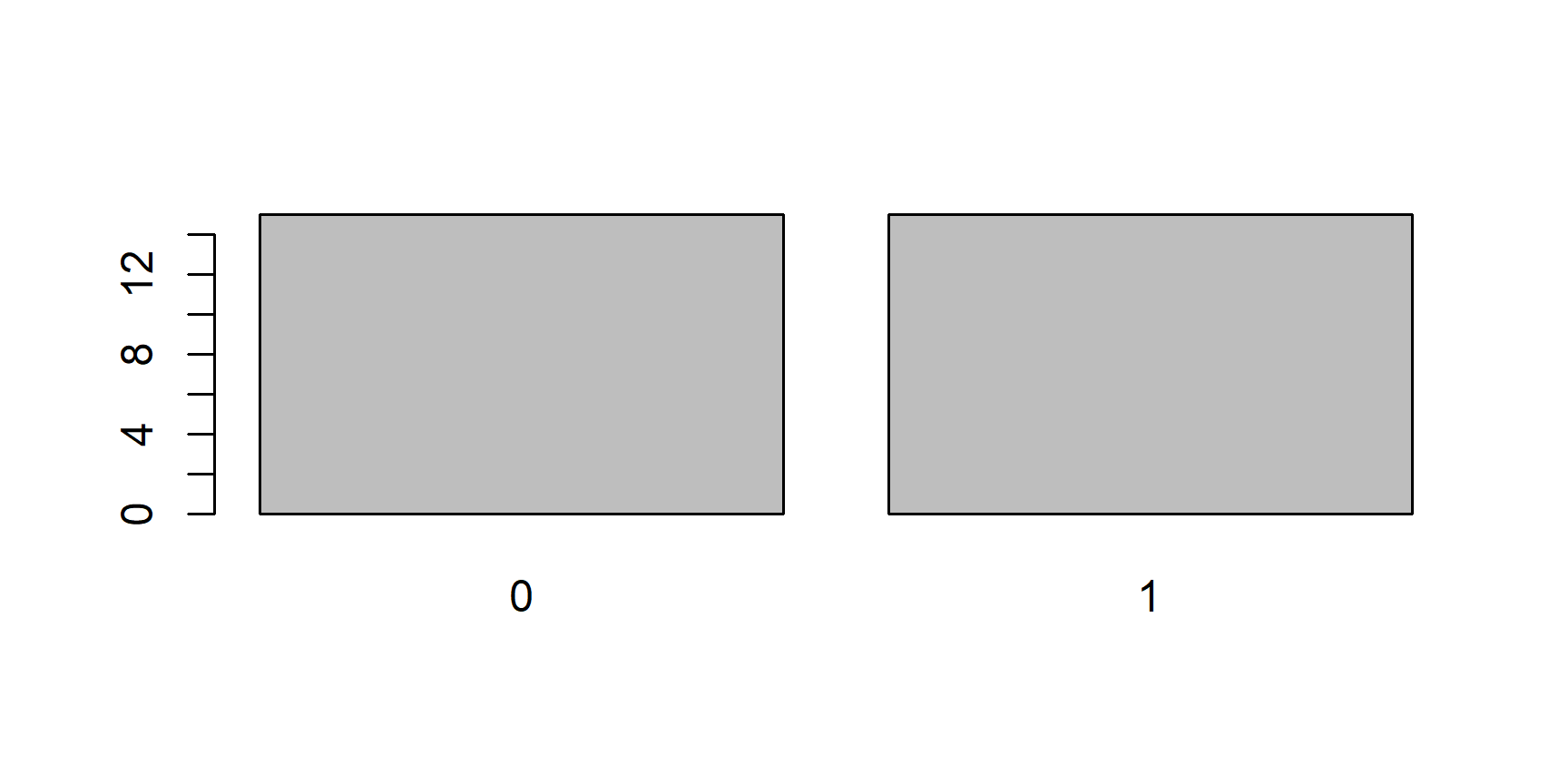

plot()function. This function is smart enough to know that if you give it a factor it should plot it using a bar chart, and not a histogram or a scatter plot:plot(x)

Now, let’s take the first 15 elements of

x, which are all0s and plot them:Even though our new object

yonly includes0s, thelevelsattribute still tellsRthat this is a factor of (at least potentially) two levels:"0"and"1"and soplot()leaves a room for the1s.The last consequence is directly related to this. Since the levels of an object of class

factorare stored as its attributes, any additional values put inside the objects will be invalid and turned intoNAs (Rwill warn us of this). In other words, you can only add those values that are among the ones produced bylevels()to an object of classfactor:# try adding invalid values -4 and 3 to the end of vector x x[31:32] <- c(-4, 3) x[1] 0 0 0 0 0 0 0 0 0 0 0 0 0 [14] 0 0 1 1 1 1 1 1 1 1 1 1 1 [27] 1 1 1 1 <NA> <NA> Levels: 0 1The only way to add these values to a factor is to first coerce it to

numeric, then add the values, and then turn it back intofactor:# coerce x to numeric x <- as.numeric(x[1:30]) class(x)[1] "numeric"# but remember that 0s and 1s are now 1s and 2s! x[1] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2# so subtract 1 to make the values 0s and 1s again x <- x - 1 # add the new values x <- c(x, -4, 3) # back into fractor x <- as.factor(x) x[1] 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 [23] 1 1 1 1 1 1 1 1 -4 3 Levels: -4 0 1 3# SUCCESS! # reset x <- as.factor(rep(0:1, each = 15)) # one-liner x <- as.factor(c(as.numeric(x[1:30]) - 1, -4, 3)) x[1] 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 [23] 1 1 1 1 1 1 1 1 -4 3 Levels: -4 0 1 3Told you factors were weird…

"ordered", finally, these are the same as factors but, in addition to having levels, these levels are ordered and thus allow comparison (notice theLevels: 0 < 1below):# coerce x to numeric x <- as.ordered(rep(0:1, each = 15)) x[1] 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 Levels: 0 < 1# we can now compare the levels x[1] < x[30][1] TRUE[1] NAObjects of class

orderedare useful for storing ordinal variables, e.g., age group.

In addition to these five sorts of elements, there are three special wee snowflakes:

NA, stands for “not applicable” and is used for missing data. Unlike other kinds of elements, it can be bound into a vector along with elements of any class.NaN, stands for “not a number”. It is technically of classnumericbut only occurs as the output of invalid mathematical operations, such as dividing zero by zero or taking a square root of a negative number:Inf(or-Inf), infinity. Reserved for division of a non-zero number by zero (no, it’s not technically right):235/0[1] Inf-85.123/0[1] -Inf

Data structures

So that’s most of what you need to know about elements. Let’s talk about putting elements together. As mentioned above, elements can be grouped in various data structures. These differ in the ways in which they arrange elements:

vectors arrange elements in a line. they don’t have dimensions and can only contain elements of same class (e.g.,

"numeric","character","logical").# a vector letters[5:15][1] "e" "f" "g" "h" "i" "j" "k" "l" "m" "n" "o"If you try to force elements of different classes to a single vector, they will all be converted to the most complex class. The order of complexity, from least to most complex, is:

logical,numeric, andcharacter. Elements of classfactorandorderedcannot be meaningfully bound in a vector with other classes (nor with each other): they either get converted tonumeric,character- if you’re lucky - or toNA.# c(logical, numeric) results in numeric x <- c(T, F, 1:6) x[1] 1 0 1 2 3 4 5 6class(x)[1] "integer"# integer is like numeric but only for whole numbers to save computer memory # adding character results in character x <- c(x, "foo") # the numbers 1-6 are not numeric any more! x[1] "1" "0" "1" "2" "3" "4" "5" "6" "foo"class(x)[1] "character"matrices arrange elements in a square/rectangle, i.e., a two-dimensional arrangement of rows and columns. They can also only accommodate elements of the same class and cannot store attributes of elements. That means, you can’t use them to store (ordered) factors.

[,1] [,2] [,3] [,4] [,5] [1,] 1.055165 -0.1471338 0.3655892 1.4756397 1.2200063 [2,] -0.312205 1.3333199 2.0918949 -0.2479756 1.9722208 [3,] 2.900048 0.3400205 0.8601729 -1.8949090 1.6148427 [4,] 0.253823 -0.1885970 0.3777971 2.1995795 -0.2421898[1] 0 1 0 1 1 1 1 0 1 1 Levels: 0 1# not factors any more! matrix(x, ncol = 5)[,1] [,2] [,3] [,4] [,5] [1,] "0" "0" "1" "1" "1" [2,] "1" "1" "1" "0" "1"lists arrange elements in a collection of vectors or other data structures. Different vectors/structures can be of different lengths and contain elements of different classes. Elements of lists and, by extension, data frames can be accessed using the

$operator, provided we gave them names.# a list my_list <- list( # 1st element of list is a numeric matrix A = matrix(rnorm(20, 1, 1), ncol = 5), # 2nd element is a character vector B = letters[1:5], # third is a data.frame C = data.frame(x = c(1:3), y = LETTERS[1:3]) ) my_list$A [,1] [,2] [,3] [,4] [,5] [1,] 0.76931823 -1.08618490 0.9681657 1.4600036 0.4959855 [2,] 0.72052941 0.88221429 0.1313416 -0.4708397 1.6079313 [3,] 1.59016176 -0.05850948 1.6047298 2.3353268 2.0576108 [4,] -0.07917997 -0.44227655 1.7916259 1.1658096 1.7367261 $B [1] "a" "b" "c" "d" "e" $C x y 1 1 A 2 2 B 3 3 C# we can use the $ operator to access NAMED elements of lists my_list$B[1] "a" "b" "c" "d" "e"# this is also true for data frames my_list$C$x[1] 1 2 3# but not for vectors or matrices my_list$A$1Error: <text>:2:11: unexpected numeric constant 1: # but not for vectors or matrices 2: my_list$A$1 ^data frames are lists but have an additional constraint: all the vectors of a

data.framemust be of the same length. That is the reasons why your datasets are always rectangular.arrays are matrices of n number of dimensions. Matrix is always a square/rectangle. An

arraycan also be a cube, or a higher dimensional structure. Admittedly, this is tricky to visualise but it is true., , 1 [,1] [,2] [,3] [,4] [,5] [1,] 2.5976880 1.5184335 -0.1606104 1.1669416 -0.7400089 [2,] 1.2160238 1.0783854 0.7216185 1.1826940 1.7860680 [3,] 0.4402993 -0.5678174 -0.4668042 1.6195435 1.8004761 [4,] 1.4861099 1.3684519 -1.0449207 -0.1886814 1.5040799 , , 2 [,1] [,2] [,3] [,4] [,5] [1,] 1.1476941 1.11917744 -0.1776244 2.72380113 -0.08240104 [2,] 1.2684743 1.29151023 1.7625905 1.02446040 1.31825629 [3,] 0.2606121 0.11962100 2.1697031 -1.32070330 2.55588637 [4,] -0.7122300 0.03364262 -0.6044303 -0.07403524 2.94666277 , , 3 [,1] [,2] [,3] [,4] [,5] [1,] 2.1113354 2.7328277 0.7931835 1.08066355 0.9086148 [2,] -0.8837650 -0.9711982 0.6291291 0.27657309 2.6004920 [3,] -0.1937936 0.7128997 0.1227985 1.30140982 1.2998969 [4,] 1.2716775 0.9521639 1.2071021 0.05938047 0.1566912

Different data structures are useful for different things but bear in mind that, ultimately, they are all just bunches of elements. This understanding is crucial for working with data.

There are only three ways to ask for elements

Now that you understand that all data boil down to elements, let’s look at how to ask R for the elements you want.

As the section heading suggests, there are only three ways to do this:

- indices

- logical vector

- names (only if elements are named, usually in lists and data frames)

Let’s take a closer look at these ways one at a time.

Indices

The first way to ask for an element is to simply provide the numeric position of the desired element in the structure (vector, list…) in a set of square brackets [] at the end of the object name:

x <- c("I", " ", "l", "o", "v", "e", " ", "R")

# get the 6th element

x[6]

[1] "e"

To get more than just one element at a time, you need to provide a vector of indices.

For instance, to get the elements 3-6 of x, we can do:

Remember that some structures can contain as their elements other structures.

For example asking for the first element of my_list will return:

my_list[1]

$A

[,1] [,2] [,3] [,4] [,5]

[1,] 0.76931823 -1.08618490 0.9681657 1.4600036 0.4959855

[2,] 0.72052941 0.88221429 0.1313416 -0.4708397 1.6079313

[3,] 1.59016176 -0.05850948 1.6047298 2.3353268 2.0576108

[4,] -0.07917997 -0.44227655 1.7916259 1.1658096 1.7367261

The $A at the top of the output indicates that we have accessed the element A of my_list but not really accessed the matrix itself.

Thus, at this stage, we wouldn’t be able to ask for its elements.

To access the matrix contained in my_list$A, we need to write either exactly that, or use double brackets:

my_list[[1]]

[,1] [,2] [,3] [,4] [,5]

[1,] 0.76931823 -1.08618490 0.9681657 1.4600036 0.4959855

[2,] 0.72052941 0.88221429 0.1313416 -0.4708397 1.6079313

[3,] 1.59016176 -0.05850948 1.6047298 2.3353268 2.0576108

[4,] -0.07917997 -0.44227655 1.7916259 1.1658096 1.7367261# with the $A now gone from output, we can access the matrix itself

my_list[[1]][1]

[1] 0.7693182

As discussed above, some data structures are dimensionless (vectors, lists), while others are arranged in n-dimensional rectangles (where n > 1).

When indexing/subsetting elements of dimensional structures, we need to provide coordinates of the elements for each dimension.

This is done by providing n numbers or vectors in the []s separated by a comma.

A matrix, for instance has 2 dimensions, rows and columns.

The first number/vector in the []s represents rows and the second columns.

Leaving either position blank will return all rows/columns:

mat <- matrix(LETTERS[1:20], ncol = 5)

mat

[,1] [,2] [,3] [,4] [,5]

[1,] "A" "E" "I" "M" "Q"

[2,] "B" "F" "J" "N" "R"

[3,] "C" "G" "K" "O" "S"

[4,] "D" "H" "L" "P" "T" # blank spaces technically not needed but improve code readability

mat[1, ] # first row

[1] "A" "E" "I" "M" "Q"mat[ , 1] # first column

[1] "A" "B" "C" "D"mat[c(2, 4), ] # rows 2 and 4, notice the c()

[,1] [,2] [,3] [,4] [,5]

[1,] "B" "F" "J" "N" "R"

[2,] "D" "H" "L" "P" "T" mat[c(2, 4), 1:3] # elements 2 and 4 of columns 1-3

[,1] [,2] [,3]

[1,] "B" "F" "J"

[2,] "D" "H" "L"

To get the full matrix, we simply type its name. However, you can think of the same operation as asking for all rows and all columns of the matrix:

mat[ , ] # all rows, all columns

[,1] [,2] [,3] [,4] [,5]

[1,] "A" "E" "I" "M" "Q"

[2,] "B" "F" "J" "N" "R"

[3,] "C" "G" "K" "O" "S"

[4,] "D" "H" "L" "P" "T"

The same is the case with data frames:

df <- data.frame(id = LETTERS[1:6],

group = rep(c("Control", "Treatment"), each = 3),

score = rnorm(6, 100, 20))

df

id group score

1 A Control 90.98199

2 B Control 97.49292

3 C Control 102.92184

4 D Treatment 98.79165

5 E Treatment 87.10690

6 F Treatment 88.11209df[1, ] # first row

id group score

1 A Control 90.98199df[4:6, c(1, 3)]

id score

4 D 98.79165

5 E 87.10690

6 F 88.11209

This [row, column] system can be extended to multiple dimensions.

As we said above, arrays can have n dimensions and so we need n positions in the []s to ask for elements:

, , 1

[,1] [,2] [,3] [,4] [,5]

[1,] 1.7707501 0.7524663 1.425150 0.49733345 0.4870223

[2,] 1.1383839 0.6776926 0.403361 1.50783347 1.2864144

[3,] 0.2674704 1.4581483 2.251834 0.09147882 1.8825147

[4,] 2.5134044 0.2891996 1.171600 0.87606133 0.1450124

, , 2

[,1] [,2] [,3] [,4] [,5]

[1,] 1.9835903 0.5433640 0.6031648 0.6784301 -0.1070031

[2,] 0.9048236 -0.1387803 0.7941896 1.3382661 1.8920177

[3,] -0.1375305 0.1514546 1.0429116 2.0954125 2.3287705

[4,] 0.7669415 2.4522680 1.8792214 0.1113503 2.3916659

, , 3

[,1] [,2] [,3] [,4] [,5]

[1,] 2.45591438 -0.2668876 2.6124300 -1.2737003 1.7063435

[2,] -0.04572805 -0.8134289 1.5681852 1.0479657 -1.4018941

[3,] 1.14437886 2.1435908 1.6348806 1.4219561 0.6546685

[4,] 1.21282827 -0.7582147 0.8400534 0.9377301 -0.4833443arr_3d[1, , ] # first row-slice

[,1] [,2] [,3]

[1,] 1.7707501 1.9835903 2.4559144

[2,] 0.7524663 0.5433640 -0.2668876

[3,] 1.4251497 0.6031648 2.6124300

[4,] 0.4973334 0.6784301 -1.2737003

[5,] 0.4870223 -0.1070031 1.7063435arr_3d[1, 2:4, 3] # elements in row 1 of columns 2-4 in the 3rd space of the 3rd dimension

[1] -0.2668876 2.6124300 -1.2737003

While 3D arrays can still be visualised as cubes (or rectangular cuboids), higher-n-dimensional arrays are trickier.

For instance, a 4D array would have a separate cuboid in every position of [x, , , ], [ , y, , ], [\,\,\z,\], and[\,\,\,\q]`.

You are not likely to come across 3+ dimensional arrays but it is good to understand that, in principle, they are just extensions of 2D arrays (matrices) and thus same rules apply.

Take home message: when using indices to ask for elements, remember that to request more than one, you need to give a vector of indices (i.e., numbers bound in a c()).

Also remember that some data structures need you to specify dimensions separated by a comma (most often just rows and columns for matrices and data frames).

Logical vectors

The second way of asking for elements is by putting a vector of logical (AKA Boolean) values in the []s.

An important requirement here is that the vector must be the same length as the one being subsetted.

So, for a vector with three elements, we need to provide three logical values, TRUE for “I want this one” and FALSE for “I don’t want this one”.

Let’s demonstrate this on the same vector we used for indices:

All the other principles we talked about regarding indexing apply also to logical vectors. Note also, that higher 2D structures need a logical row vector and a logical column vector:

# recall our mat

mat

[,1] [,2] [,3] [,4] [,5]

[1,] "A" "E" "I" "M" "Q"

[2,] "B" "F" "J" "N" "R"

[3,] "C" "G" "K" "O" "S"

[4,] "D" "H" "L" "P" "T" # rows 2 and 4

mat[c(T, F, T, F), ]

[,1] [,2] [,3] [,4] [,5]

[1,] "A" "E" "I" "M" "Q"

[2,] "C" "G" "K" "O" "S" [1] "D" "H"# you can even COMBINE the two ways!

mat[4, c(T, T, F, F, F)]

[1] "D" "H"

And as if vectors weren’t enough, you can even use matrices of logical values to subset matrices and data frames:

[,1] [,2] [,3]

[1,] FALSE FALSE FALSE

[2,] FALSE FALSE FALSE

[3,] FALSE FALSE FALSE

[4,] TRUE FALSE TRUE

[5,] TRUE FALSE TRUE

[6,] TRUE FALSE TRUEdf[mat_logic]

[1] "D" "E" "F" " 98.79165" " 87.10690"

[6] " 88.11209"

Notice, however, that the output is a vector so two things happened: first, the rectangular structure has been erased and second, since vectors can only contain elements of the same class (see above), the numbers got converted into character strings (hence the ""s).

Nevertheless, this method of subsetting using logical matrices can be useful for replacing several values in different rows and columns with another value:

# replace with NAs

df[mat_logic] <- NA

df

id group score

1 A Control 90.98199

2 B Control 97.49292

3 C Control 102.92184

4 <NA> Treatment NA

5 <NA> Treatment NA

6 <NA> Treatment NA

To use a different example, take the function lower.tri().

It can be used to subset a matrix in order to get the lower triangle (with or without the diagonal).

Consider matrix mat2 which has "L"s in its lower triangle, "U"s in its upper triangle, and "D"s on the diagonal:

[,1] [,2] [,3] [,4]

[1,] "D" "U" "U" "U"

[2,] "L" "D" "U" "U"

[3,] "L" "L" "D" "U"

[4,] "L" "L" "L" "D"

Let’s use lower.tri() to ask for the elements in its lower triangle:

Adding the , diag = T will return the lower triangle along with the diagonal:

mat2[lower.tri(mat2, diag = T)]

[1] "D" "L" "L" "L" "D" "L" "L" "D" "L" "D"# we got only "L"s and "D"s

So what does the function actually do? What is this sorcery? Let’s look at the output of the function:

lower.tri(mat2)

[,1] [,2] [,3] [,4]

[1,] FALSE FALSE FALSE FALSE

[2,] TRUE FALSE FALSE FALSE

[3,] TRUE TRUE FALSE FALSE

[4,] TRUE TRUE TRUE FALSESo the function produces a matrix of logicals, the same size as out mat2, with TRUEs in the lower triangle and FALSEs elsewhere.

What we did above is simply use this matrix to subset mat2[].

If you’re curious how the function produces the logical matrix then, first of all, that’s great, keep it up and second, you can look at the code wrapped in the lower.tri object (since functions are only objects of a special kind with code inside instead of data):

lower.tri

function (x, diag = FALSE)

{

x <- as.matrix(x)

if (diag)

row(x) >= col(x)

else row(x) > col(x)

}

<bytecode: 0x0000000015a39ab0>

<environment: namespace:base>Right, let’s see.

If we set the diag argument to TRUE the function returns row(x) >= col(x).

If we leave it set to FALSE (default), it returns row(x) > col(x).

Let’s substitute x for our mat2 and try it out:

row(mat2)

[,1] [,2] [,3] [,4]

[1,] 1 1 1 1

[2,] 2 2 2 2

[3,] 3 3 3 3

[4,] 4 4 4 4col(mat2)

[,1] [,2] [,3] [,4]

[1,] 1 2 3 4

[2,] 1 2 3 4

[3,] 1 2 3 4

[4,] 1 2 3 4 [,1] [,2] [,3] [,4]

[1,] TRUE FALSE FALSE FALSE

[2,] TRUE TRUE FALSE FALSE

[3,] TRUE TRUE TRUE FALSE

[4,] TRUE TRUE TRUE TRUE [1] "D" "L" "L" "L" "D" "L" "L" "D" "L" "D" [,1] [,2] [,3] [,4]

[1,] FALSE FALSE FALSE FALSE

[2,] TRUE FALSE FALSE FALSE

[3,] TRUE TRUE FALSE FALSE

[4,] TRUE TRUE TRUE FALSE[1] "L" "L" "L" "L" "L" "L"

MAGIC!

Take home message: When subsetting using logical vectors, the vectors must be the same length as the vectors you are subsetting. The same goes for logical matrices: they must be the same size as the matrix/data frame you are subsetting.

Complementary subsetting

Both of the aforementioned ways of asking for subsets of data can be inverted.

For indices, you can simply put a - sign before the vector:

# elements 3-6 of x

x[3:6]

[1] "l" "o" "v" "e"# invert the selection

x[-(3:6)]

[1] "I" " " " " "R"#equivalent to

x[c(1, 2, 7, 8)]

[1] "I" " " " " "R"

For logical subsetting, you need to negate the values.

That is done using the logical negation operator ‘!’ (AKA “not”):

y <- T

y

[1] TRUE# negation

!y

[1] FALSE# also works for vectors and matrices

mat_logic

[,1] [,2] [,3]

[1,] FALSE FALSE FALSE

[2,] FALSE FALSE FALSE

[3,] FALSE FALSE FALSE

[4,] TRUE FALSE TRUE

[5,] TRUE FALSE TRUE

[6,] TRUE FALSE TRUE!mat_logic

[,1] [,2] [,3]

[1,] TRUE TRUE TRUE

[2,] TRUE TRUE TRUE

[3,] TRUE TRUE TRUE

[4,] FALSE TRUE FALSE

[5,] FALSE TRUE FALSE

[6,] FALSE TRUE FALSEdf[!mat_logic]

[1] "A" "B" "C" "Control" "Control"

[6] "Control" "Treatment" "Treatment" "Treatment" " 90.98199"

[11] " 97.49292" "102.92184"

Names - $ subsetting

We already mentioned the final way of subsetting elements when we talked about lists and data frames.

To subset top-level elements of a named list or columns of a data frame, we can use the $ operator.

df$group

[1] "Control" "Control" "Control" "Treatment" "Treatment"

[6] "Treatment"What we get is a single vector that can be further subsetted using indices or logical vectors:

df$group[c(3, 5)]

[1] "Control" "Treatment"

Bear in mind that all data cleaning and transforming ultimately boils down to using one, two, or all of these three ways of subsetting elements!

Think of commands in terms of their output

In order to be able to manipulate your data, you need to understand that any chunk of code is just a formal representation of what the code is supposed to be doing, i.e., its output.

That means that you are free to put code inside []s but only so long as the output of the code is either a numeric vector (of valid values - you cannot ask for x[c(3, 6, 7)] if x has only six elements) or a logical vector/matrix of the same length/size as the object that is being subsetted.

Put any other code inside []s and R will return an error (or even worse, quietly produce some unexpected behaviour)!

So the final point we would like to stress is that you need to…

Know what to expect

You should not be surprised by the outcome of R.

If you are, that means you do not entirely understand what you asked R to do.

A good way to practice this understanding is to tell yourself what form of output and what values you expect a command to return.

For instance, in the code above, we did x[-(3:6)].

Ask yourself what does the -(3:6) return.

How and why is it different from -3:6?

What will happen if you do x[-3:6]?

-(3:6)

[1] -3 -4 -5 -6-3:6

[1] -3 -2 -1 0 1 2 3 4 5 6x[-3:6]

Error in x[-3:6]: only 0's may be mixed with negative subscriptsIf any of the output above surprised you, try to understand why. What were your expectations? Do you now, having seen the actual output, understand what those commands do?

Some of you were wondering why, when replacing values in column 1 of matrix mat that are larger than, say, 2 with NAs, you had to specify the column several times, e.g.:

mat[mat[ , 1] > 2, 1] <- NA

## 2 instances of mat[ , 1] in total:

# 1.

outer

mat[..., 1]

# 2.

comparison

mat[ , 1] > ...

Let’s consider matrix mat:

[,1] [,2] [,3] [,4] [,5]

[1,] -0.4821663 -1.235421 0.09837657 0.2507154 1.58974540

[2,] 0.8314073 -1.728620 1.31394902 -0.9550187 -1.08255274

[3,] 2.4322500 -1.426386 0.56463977 0.3002515 0.63186928

[4,] 0.8201293 -1.051542 -1.55258377 0.2782249 -1.58621500

[5,] -1.3918933 1.410444 1.20531294 -0.2560778 -0.05314628

[,6]

[1,] -0.2969522

[2,] -0.3459194

[3,] 0.6151551

[4,] -0.2604405

[5,] 0.6354582and think of the command in terms of the expected outcome of its constituent elements.

The logical operator ‘>’ returns a logical vector corresponding to the answer to the question “is the value to the left of the operator larger than that to the right of the operator?” The answer can only be TRUE or FALSE.

So mat[ , 1] > 2 will return:

[1] FALSE FALSE TRUE FALSE FALSEThere is no way of knowing that these values correspond to the 1st column of mat just from the output alone. That information has been lost.

This means that, if we type mat[mat[ , 1] > 2, ], we are passing a vector of T/Fs to the row position of the []s.

The logical vector itself contains no information about it coming from a comparison of the 1st row of mat to the value of 2.

So R can only understand the command as mat[c(FALSE, FALSE, TRUE, FALSE, FALSE), ] and will try to recycle the vector FALSE, FALSE, TRUE, FALSE, FALSE for every column of mat:

mat[mat[ , 1] > 2, ]

[1] 2.4322500 -1.4263864 0.5646398 0.3002515 0.6318693 0.6151551

If you want to only extract values from mat[ , 1] that correspond to the TRUEs, you must tell R that, hence the apparent (but not actual) repetition in mat[mat[ , 1] > 2, 1].

mat[mat[ , 1] > 2, 1] <- NA

mat

[,1] [,2] [,3] [,4] [,5]

[1,] -0.4821663 -1.235421 0.09837657 0.2507154 1.58974540

[2,] 0.8314073 -1.728620 1.31394902 -0.9550187 -1.08255274

[3,] NA -1.426386 0.56463977 0.3002515 0.63186928

[4,] 0.8201293 -1.051542 -1.55258377 0.2782249 -1.58621500

[5,] -1.3918933 1.410444 1.20531294 -0.2560778 -0.05314628

[,6]

[1,] -0.2969522

[2,] -0.3459194

[3,] 0.6151551

[4,] -0.2604405

[5,] 0.6354582

This feature might strike some as redundant but it is actually the only sensible way.

The fact that R is not trying to guess what columns of the data you are requesting from the syntax of the code used for subsetting the rows (and vice versa) means, that you can subset matrix A based on some comparison of matrix B (provided they are the same size).

Or, you can replace values of mat[ , 3] based on some condition concerning mat[ , 2].

That can be very handy!

It may take some time to get the hang of this but we cannot overstate the importance of knowing what the expected outcome of your commands is.

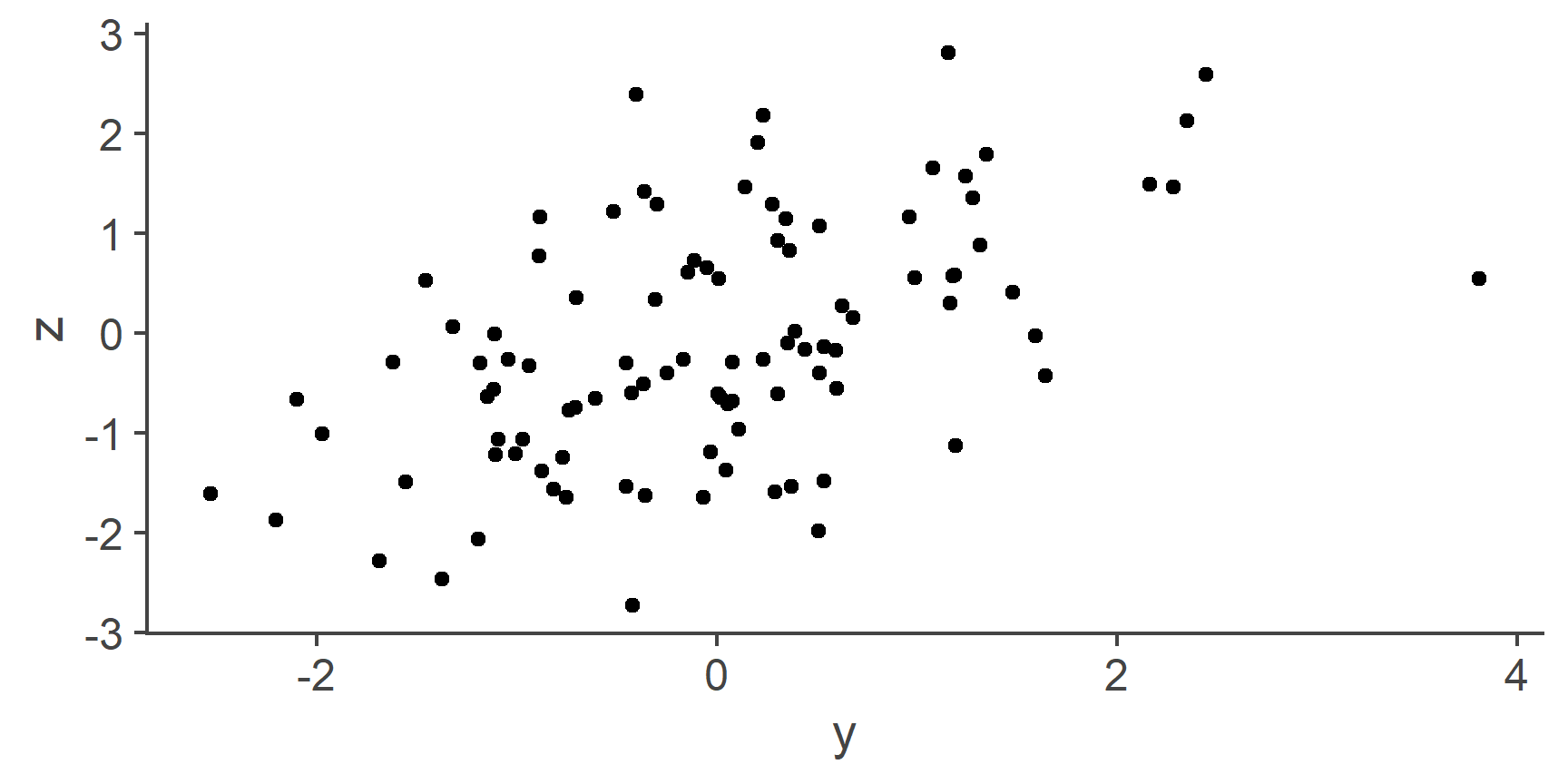

Putting it all together

Now that we’ve discussed the key principles of talking to computers, let’s solidify this new understanding using an example you will often encounter.

According to our second principle, if we want to keep it for later, we must put it in an object.

Let’s have a look at some health and IQ data stored in some data frame object called df:

Now, let’s replace all values of IQ that are further than \(\pm 2\) standard deviations from the mean of the variable with NAs.

First, we need to think conceptually and algorithmically about this task: What does it actually mean for a data point to be further than \(\pm 2\) standard deviations from the mean?

Well, that means that if \(Mean(x) = 100\) and \(std.dev(x) = 15.34\), we want to select all data points (elements of x) that are either smaller than \(100 - 2 \times 15.34 = 69.32\) or larger than \(100 + 2 \times 15.34 = 130.68\).

# let's start by calculating the mean

# (the outer brackets are there for instant printing)

# na.rm = T is there to disregard any potential NAs

(m_iq <- mean(df$IQ, na.rm = T))

[1] 99.99622# now let's get the standard deviation

(sd_iq <- sd(df$IQ, na.rm = T))

[1] 15.34238# now calculate the lower and upper critical values

(crit_lo <- m_iq - 2 * sd_iq)

[1] 69.31145(crit_hi <- m_iq + 2 * sd_iq)

[1] 130.681

This tells us that we want to replace all elements of df$IQ that are smaller than 69.31 or larger than 130.68.

Let’s do this!

# let's get a logical vector with TRUE where IQ is larger then crit_hi and

# FALSE otherwise

condition_hi <- df$IQ > crit_hi

# same for IQ smaller than crit_lo

condition_lo <- df$IQ < crit_lo

Since we want all data points that fulfil either condition, we need to use the OR operator.

The R symbol for OR is a vertical bar “|” (see bottom of document for more info on logical operators):

# create logical vector with TRUE if df$IQ meets

# condition_lo OR condition_hi

condition <- condition_lo | condition_hi

Next, we want to replace the values that fulfil the condition with NAs, in other words, we want to do a little subsetting.

As we’ve discussed, there are only two ways of doing this: indices and logicals.

If we heed principles 5 and 6, think of our code in terms of its output and know what to expect, we will understand that the code above returns a logical vector of length(df$IQ) with TRUEs in places corresponding to positions of those elements of df$IQ that are further than \(\pm 2SD\) from the mean and FALSEs elsewhere.

Let’s check:

condition

[1] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE TRUE

[11] TRUE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[21] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[31] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[41] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[51] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[61] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[71] TRUE FALSE FALSE FALSE FALSE FALSE TRUE FALSE FALSE FALSE

[81] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[91] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[101] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[111] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[121] FALSE TRUE FALSE FALSE TRUE FALSE FALSE FALSE FALSE FALSE

[131] FALSE FALSE TRUE FALSE TRUE FALSE FALSE FALSE FALSE FALSE

[141] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[151] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[161] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[171] TRUE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[181] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[191] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[201] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[211] FALSE TRUE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[221] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[231] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[241] FALSE FALSE FALSE FALSE FALSE FALSE TRUE FALSE FALSE FALSE

[251] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[261] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE TRUE

[271] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[281] FALSE FALSE FALSE FALSE FALSE FALSE FALSE TRUE FALSE FALSE

[291] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[301] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[311] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

[321] FALSE FALSE FALSE FALSE FALSE# now let's use this object to index out elements of df$IQ which

# fulfil the condition

df$IQ[condition]

[1] 20.00000 132.35251 58.85790 132.98465 58.24403 135.92026

[7] 132.92835 130.98682 61.39313 67.00030 135.09655 55.77873

[13] 68.37715

Finally, we want to replace these values with NAs.

That’s easy right?

All we need to do is to put this vector into []s next to df$IQ (or df[["IQ"]], df[ , "IQ"], df[ , 4], or different still, df[[4]]) and assign the value of NA to them:

df$IQ[condition] <- NA

# see the result (only rows with NAs in IQ)

df[is.na(df[[4]]), ]

ID Agegroup ExGroup IQ

10 10 1 1 NA

11 11 1 1 NA

71 71 1 2 NA

77 77 1 2 NA

122 122 1 2 NA

125 125 1 2 NA

133 133 1 2 NA

135 135 1 2 NA

171 171 2 1 NA

212 212 2 1 NA

247 247 2 2 NA

270 270 2 2 NA

288 288 2 2 NASUCCESS!

We replaced outlying values of IQ with NAs.

Or, to be pedantic (and that is a virtue when talking to computers), we took the labels identifying the elements mat[c(FALSE, FALSE, TRUE, FALSE, FALSE), ] of the df$IQ vector, put those labels on a bunch of NAs and burned the original elements.

All that because you cannot really change an R object.

Are there quicker ways?

You might be wondering if there are other ways of achieving the same outcome, perhaps with fewer steps. Well, aren’t you lucky, there are indeedor instance, you can put all of the code above in a single command, like this:

Of course, fewer commands doesn’t necessarily mean better code.

The above has the benefit of not creating any additional objects (m_iq, condition_lo, etc.) and not cluttering your environment.

However, it may be less intelligible to a novice R user (the annotation does help though).

A particularly smart and elegant way would be to realise that the condition above is the same as saying we want all the points xi for which \(|x_i - Mean(x)| > 2 \times 15.34\). The \(x_i - Mean(x)\) has the effect of centring x so that its mean is zero and the absolute value (\(|...|\)) disregards the sign. Thus \(|x| > 1\) is the same as \(x < -1\) OR \(x > 1\).

Good, so the condition we want to apply to subset the IQ variable of df is abs(df$IQ - mean(df$IQ, na.rm = T)) > 2 * sd(df$IQ, na.rm = T).

The rest, is the same:

This is quite a neat way of replacing outliers with NAs and code like this shows a desire to make things elegant and efficient.

However, all three approaches discussed above (and potentially others) are correct.

If it works, it’s fine!

Elementary, my dear Watson! ;)

Functions

Every operation on a value, vector, object, and other structures in R is done using functions.

You’ve already used several: c() is a function, as are <-, +, -, *, etc. A function is a special kind of object that takes some arguments and uses them to run the code contained inside of it.

Every function has the form function.name() and the arguments are given inside the brackets.

Arguments must be separated with a comma.

Different functions take different number of various arguments.

The help file brought up by the ?... gives a list of arguments used by the given function along with the description of what the arguments do and what legal values they take.

Let’s look at the most basic functions in R: the assignment operator <- and the concatenate function c().

Yes, <- is a function, even though the way it’s used differs from most other functions.

It takes exactly two arguments – a name of an object to the left of it and an object or a value to the right of it.

the output of this function is an object with the provided name containing the values assigned:

# arg 1: name function arg 2: value

x <- 3

# print x

x

[1] 3# arg 1: name function arg 2: object + value

y <- x + 7

y

[1] 10The c() function, on the other hand takes an arbitrary number of arguments, including none at all.

The arguments can either be single values (numeric, character, logical, NA), or objects.

The function then outputs a single vector consisting of all the arguments:

c(1, 5, x, 9, y)

[1] 1 5 3 9 10Packages

All functions in R, except the ones you write yourself or copy from online forums, come in packages.

These are essentially folders that contain all the code that gets run whenever you use a function along with help files and some other data.

Basic R installation comes with several packages and every time you open R or RStudio, some of these will get loaded, making the functions these packages contain available for you to use.

That’s why you don’t have to worry about packages when using functions such as c(), mean(), or plot().

Other functions however come in packages that either don’t get loaded automatically upon startup or need to be installed.

For instance, a very handy package for data visualisation that is not installed automatically is ggplot2.

If we want to make use of the many functions in this package, we need to first install it using the install.packages() command:

install.packages("ggplot2")

Notice that the name of the package is in quotes. This is important. Without the quotes, the command will not work!

You only ever need to install a package once. R will go online, download the package from the package repository and install it on your computer.

Once we’ve installed a package, we need to load it so that R can access the functions provided in the package.

This is done using the library() command:

Packages have to be loaded every session.

If you load a package, you can use its functions until you close R.

When you re-open it, you will have to load the package again.

For that reason, it is very useful to load all the packages you will need for your data processing, visualisation, and analysis at the very beginning of your script.

Now that we have made ggplot2 available for our current R session, we can use any of its functions.

For a quick descriptive plot of the a variable, we can use the qplot() function:

If you don’t install or load the package that contains the function you want to use, R will tell you that it cannot find the function.

Let’s illustrate this on the example of a function describe() from the package psych which is pre-installed but not loaded at startup:

describe(df$ID)

Error in describe(df$ID): could not find function "describe"Once, we load the psych package, R will be able to find the function:

Using functions

The ()s

As a general rule of thumb, the way to tell R that the thing we are calling is a function is to put brackets – () – after the name, e.g., data.frame(), rnorm(), or factor().

The only exception to this rule are operators – functions that have a convenient infix form – such as the assignment operators (<-, =), mathematical operators (+, ^, %%, …), logical operators (==, >, %in%, …), and a handful of others.

Even the subsetting square brackets [] are a functionowever, the infix form is just a shorthand and all of these can be used in the standard way functions are used:

2 + 3 # infix form

[1] 5`+`(2, 3) # prefix form, notice the backticks

[1] 5The above is to explain the logic behind a simple principle in R programming: If it is a function (used in its standard prefix form) then it must have ()s.

If it is not a function, then it must NOT have them.

If you understand this, you will never attempt to run commands like as.factor[x] or my_data(...)!

Specifying arguments

The vast majority of functions require you to give it at least one argument.

Arguments of a function are often named.

From the point of view of a user of a function, these names are only placeholders for some values we want to pass to the function.

In RStudio, you can type the name of a function, open the bracket and then press the Tab key to see a list of arguments the function takes.

You can try it now, type, for instance, sd( and press the Tab key.

You should see a pop-up list of two arguments – x = and na.rm = – appear.

This means, that to use the sd() function explicitly, we much give it two arguments: a numeric vector and a single logical value (technically, a logical vector of length 1):

The output of the function is NA because our vector contained an NA value and the na.rm = argument that removes the NAs was set to FALSE.

Try setting it to TRUE (or T if you’re a lazy typist) and see what R gives you.

Look what happens when we run the following command:

We didn’t specify the value of the na.rm = argument but the code worked anyway.

Why might that be…?

Default values of arguments

The reason for this behaviour is that functions can have arguments set to some value by default to facilitate the use of the functions in the most common situations by reducing the amount of typing.

Look at the documentation for the sd() function (by running ?sd in the console).

You should see that under “Usage” it reads sd(x, na.rm = FALSE).

This means that, by default, the na.rm = argument is set to FALSE and if you don’t specify its value manually, the function will run with this setting.

Re-visiting our example with the NA value, you can see that the output is as it was before:

Argument matching

You may have noticed a tiny change in the way the first argument was specified in the line above (coding is a lot about attention to detail!) – there is no x = in the code.

The reason why R is still able to understand what we meant is argument matching.

If no names are given to the arguments, R assumes they are entered in the order in which they were specified when the function was created.

This is the same order you can look up in the “Usage” section of the function documentation (using ?)

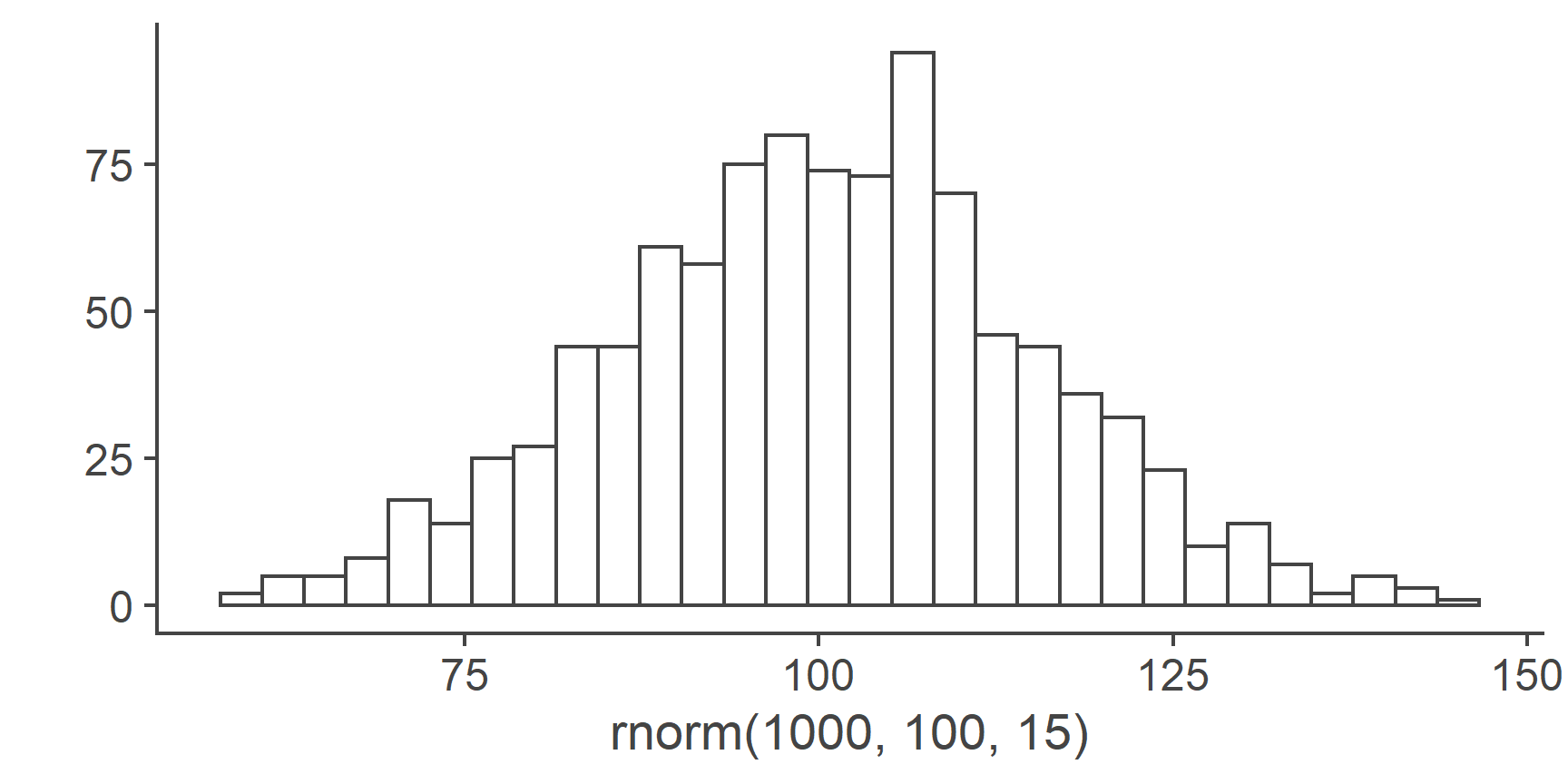

To give you another example, take rnorm() for instance.

If you pull up the documentation (AKA help) of the file with ?rnorm, you’ll see that it takes 3 arguments: n =, mean =, and sd =.

The latter two have default values but n = doesn’t so we must provide its value.

Setting the first argument to 10 and omitting the other 2 will generate a vector of 10 numbers drawn from a normal distribution with \(\mu = 0\) and \(\sigma=1\):

rnorm(10)

[1] -0.7518433 0.3575798 -0.4231625 0.3173492 0.9610531 -0.5929562

[7] 1.1406227 0.5375575 -0.3747863 -2.6891043Lets say we want to change the mean to -5 but keep standard deviation the same. Relying on argument matching, we can do:

rnorm(10, -5)

[1] -4.017823 -6.258214 -5.113746 -4.435332 -6.840417 -5.725059

[7] -4.295440 -4.956638 -4.936408 -4.707679However, if we want to change the sd = argument to 3 but leave mean = set to 0, we need to let R know this more explicitly.

There are several ways to do the same thing but they all rely on the principle that unnamed values will be interpreted in order of arguments:

rnorm(10, 0, 3) # keep mean = 0

[1] -0.5869053 -3.7089712 4.1902430 -0.7493729 -1.1789825 2.8443374

[7] 3.7686362 -2.6126934 1.5321707 -1.3758607rnorm(10, , 3) # skip mean = (DON'T DO THIS! it's illegible)

[1] 2.4504947 5.4091571 3.6503523 1.6291335 -0.3655094 1.0075295

[7] -2.1954416 1.2471465 0.9276066 -6.2682078If the arguments are named, they can be entered in any order:

rnorm(sd = 2, n = 10, mean = -100)

[1] -101.10377 -102.20943 -99.72367 -99.48686 -102.01520 -101.55307

[7] -102.05843 -101.27777 -100.87286 -102.33650The important point here is that if you give a function 4 (for example) unnamed values separated with commas, R will try to match them to the first 4 arguments of the function.

If the function takes fewer than arguments or if the values are not valid for the respective arguments, R will throw an error:

rnorm(100, 5, -3, 8) # more values than arguments

Error in rnorm(100, 5, -3, 8): unused argument (8)That is, it will throw an error if you’re lucky. If you’re not, you might get all sorts of unexpected behaviours:

rnorm(10, T, -7) # illegal values passed to arguments

[1] NaN NaN NaN NaN NaN NaN NaN NaN NaN NaNPassing vectors as arguments

The majority of functions – and you’ve already seen quite a few of these – have at least one argument that can take multiple values.

The x = (first) argument of sample() or the labels = argument of factor() are examples of these.

Imagine we want to sample 10 draws with replacement from the words “elf”, “orc”, “hobbit”, and “dwarf”.

Your intuition might be to write something like sample("elf", "orc", "hobbit", "dwarf", 10).

That will however not work:

sample("elf", "orc", "hobbit", "dwarf", 10, replace = T)

Error in sample("elf", "orc", "hobbit", "dwarf", 10, replace = T): unused arguments ("dwarf", 10)

Take a moment to ponder why this produces an error…

Yes, you’re right, it has to do with argument matchingRinterprets the above command as you passing five arguments to thesample()function, which only takes three arguments. Moreover, the second argumentsize =must be a positive number,replace =must be a single logical value, andprob =is a vector of numbers between 0 and 1 that must add up to 1 and the vector must be of the same length as the vector passed to the firstx =` argument.

As you can see, our command fails on most of these criteria.

So, how do we tell R that we want to pass the four races of Middle-earth to the first argument of sample()?

Well, we need to bind them into a single vector using the c() function:

Remember: If you want to pass a vector of values into a single argument of a function, you need to use an object in your environment containing the vector or a function that outputs a vector.

The basic one is c() but others work too, e.g., sample(5:50, 10) (the : operator returns a vector containing a complete sequence of integers between the specified values).

Passing objects as arguments

Everything in R is an object and thus the values passed as arguments to functions are also objects.

It is completely up to you whether you want to create the object ad hoc for the purpose of only passing it to a function or whether you want to pass to a function an object you already have in your environment.

For example, if our four races are of particular interest to us and we want to keep them for future use, we can assign them to the environment under some name:

ME_races <- c("elf", "orc", "hobbit", "dwarf")

ME_races # here they are

[1] "elf" "orc" "hobbit" "dwarf" Then, we can just use them as arguments to functions:

Function output

Command is a representation of its output

Any complete command in R, such as the one above is merely a symbolic representation of the output it returns.

Understanding this is crucialust like in a natural language, there are many ways to say the same thing, there are multiple ways of producing the same output in R.

It’s not called a programming language for nothing!

One-way street

Another important thing to realise is that, given that there are many ways to do the same thing in R, there is a sort of directionality to the relationship between a command and its output.

If you know what a function does, you can unambiguously tell what the output will be given specified arguments.

However, once the output is produced, there is no way R can tell what command was used.

Imagine you have three bakers making the same kind of bread: one uses the traditional kneading method, one uses the slap-and-fold technique, and one uses a mixer.

If you know the recipe and the procedure they are using, you will be able to tell what they’re making.

However, once you have your three loaves in front of you, you won’t be able to say which came from which baker.

It’s the same thing with commands in R!

This is the reason why some commands look like they’re repeating things beyond necessity. Take, for instance, this line:

mat[lower.tri(mat)] <- "L"

The lower.tri() function takes a matrix as its first argument and returns a matrix of logicals with the same dimensions as the matrix provided.

Once it returns its output, R has no way of knowing what matrix was used to produce it and so it has no clue that it has anything to do with our matrix mat.

That’s why, if we want to modify the lower triangle of mat, we do it this way.

Obviously, nothing is stopping you from creating the logical matrix by means of some other approach and then use it to subset mat but the above solution is both more elegant and more intelligible.

Knowe thine output as thou knowest thyself

Because more often than not you will be using function to create some object only so that you can feed it into another function, it is essential that you understand what you are asking R to do and know what result you are expecting.

There should be no surprises!

A good way to practice is to say to yourself what the output of a command will be before you run it.

For instance, the command factor(sample(1:4, 20, T), labels = ME_races) returns a vector of class factor and length 20 containing randomly sampled values labelled according to the four races of Middle-earth we worked with.

Output is an object

Notice that in the code above we passed the sample(1:4, 20, T) command as the first argument of factor().

This works because – as we mentioned earlier – a command is merely a symbolic representation of its output and because everything in R is an object.

This means that function output is also an object.

Depending on the particular function, the output can be anything from, e.g., a logical vector of length 1 through long vectors and matrices to huge data frames and complex lists of lists of lists…

For instance, the t.test() function returns a list that contains all the information about the test you might ever need:

List of 10

$ statistic : Named num 23.5

..- attr(*, "names")= chr "t"

$ parameter : Named num 99

..- attr(*, "names")= chr "df"

$ p.value : num 2.52e-42

$ conf.int : num [1:2] 4.43 5.24

..- attr(*, "conf.level")= num 0.95

$ estimate : Named num 4.84

..- attr(*, "names")= chr "mean of x"

$ null.value : Named num 0

..- attr(*, "names")= chr "mean"

$ stderr : num 0.206

$ alternative: chr "two.sided"

$ method : chr "One Sample t-test"

$ data.name : chr "rnorm(100, 5, 2)"

- attr(*, "class")= chr "htest"If we want to know the p-value of the above test, we can simply query the list accordingly:

t_output$p.value

[1] 2.523022e-42Because the command only represents the output object, it can be accessed in the same way. Say you are running some kind of simulation study and are only interested in the t-statistic of the test. Instead of saving the entire output into some kind of named object in the environment, you can simply save the t:

Where does the output go?

Let’s go back to discussing factor().

There’s another important issue that sometime causes a level of consternation among novice R users.

Imagine we have a data set and we want to designate one of its columns as a factor so that R knows that the column contains a categorical variable.

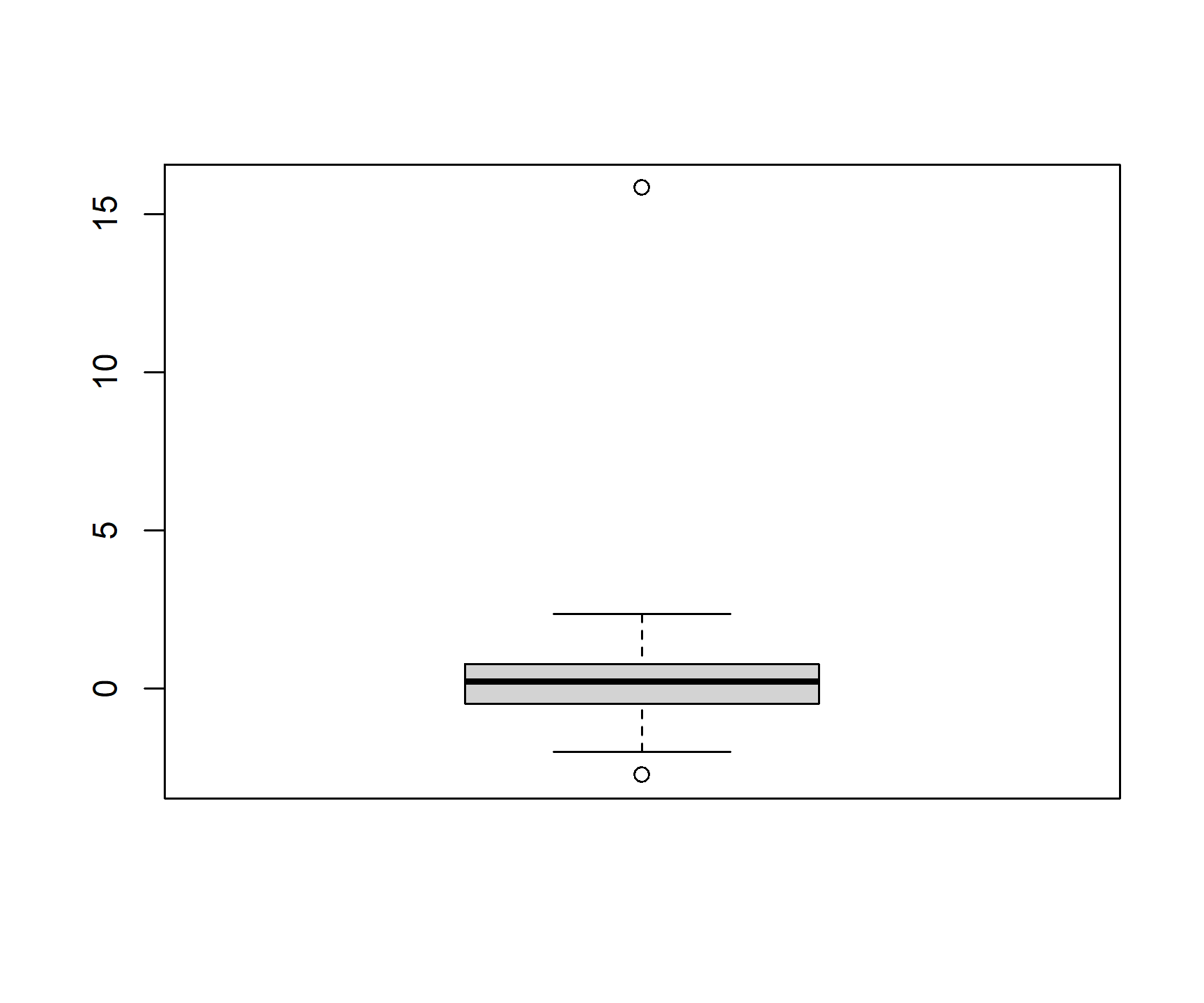

df <- data.frame(id = 1:10, x = rnorm(10))

df

id x

1 1 -0.65035157

2 2 -1.75580846

3 3 -0.01612903

4 4 -1.60192352

5 5 -2.05558512

6 6 -0.91542764

7 7 1.90594739

8 8 -1.11545359

9 9 -0.19819964

10 10 -0.40419480An intuitive way of turning id into a factor might be:

factor(df$id)

[1] 1 2 3 4 5 6 7 8 9 10

Levels: 1 2 3 4 5 6 7 8 9 10This, however, does not work:

class(df$id)

[1] "integer"The reason for this has to do with the fact that the argument-output relationship is directional.

Once the object inside df$id is passed to factor(), R forgets about the fact that it had anything to do with df or one of its columns.

It has therefore no way of knowing that you want to be modifying a column of a data frame.

Because of that, the only place factor() can return the output to is the one it uses by default.

Console

The vast majority of functions return their output into the console.

factor() is one of these functions.

That’s why when you type in the command above, you will see the printout of the output in the console.

Once it’s been returned, the output is forgotten about – R can’t see the console or read from it!

This is why factor(df$id) does not turn the id column of id into a factor.

Environment

A small number of functions return a named object to the global R environment, where you can see, access, and work with it.

The only one you will need to use for a long time to come (possibly ever) is the already familiar assignment operator <-.

You can use <- to create new objects in the environment or re-assign values to already existing names.

So, if you want to turn the id column of df into a factor you need to reassign some new object to df$id.

What object?

Well, the one returned by the factor(...) command above:

df$id <- factor(df$id)

As you can see, there is no printout now because the output of factor() has been passed into the assignment function which directed it into the df$id object.

Let’s make sure it really worked:

class(df$id)

[1] "factor"Graphical device

Functions that create graphics return their output into something called the graphical device.

It is basically the thing responsible for drawing stuff on the screen of your computer.

You’ve already encountered some of these functions – plot(), par(), lines(), abline().

Files

Finally, there are functions that can write output into all sorts of files.

For instance, if you want to save a data frame into a .csv file, you can use the read.csv() function.